Generative AI is incredible—you're likely already using it every day to create images, draft text, or brainstorm ideas. But when it comes to letting AI handle real-world tasks that require interacting with external systems, things get tricky. On their own, LLMs can't directly talk to databases, APIs, or digital services.

That's where the Model Context Protocol (MCP) comes in. MCP makes it possible for AI models to connect with the outside world in a standardized, secure way.

In this tutorial, we'll show you how to build an AI-powered application that tackles two key challenges:

- Enabling the model to make smart decisions

- Connecting it smoothly to the right tools and APIs

By combining LangChain and MCP, you'll see how to create a powerful setup that bridges LLMs with external APIs—turning your generative AI experiments into practical, real-world applications.

What's LangChain?

LangChain is a framework that helps you build apps using large language models (LLMs). It gives you some handy abstractions:

Chains

Think of these as ordered sequences where your model calls functions, APIs, or even other models.

Agents

Agents use an LLM to decide which "tool" to call next, so your app can react to intermediate results and even plan its steps.

Memory

Store and recall context over the course of a user's session or conversation.

Bottom line: LangChain helps your LLM app reason, plan, and execute complex tasks without losing its mind.

What's MCP?

MCP stands for Model Context Protocol. It's an open protocol for exposing APIs, tools, and data sources to models in a standardized way. Instead of writing a connector every time you want to link to a new service, MCP acts as a universal plug:

- Standardized Interface: Every tool looks the same to the model, regardless of what's underneath.

- Reusability: If a tool speaks MCP, you can slot it into any framework that supports MCP.

- Security & Governance: The MCP server sits between your LLM and your sensitive systems, which makes it a natural place to add access controls or logging (handy for enterprise users).

Imagine MCP as the "USB port" for AI models—one consistent way for your AI app to talk to a variety of services.
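Under the hood, MCP messages are JSON-RPC 2.0, and that shared envelope is what makes tools look the same to the model. The sketch below only builds the `tools/call` request a client sends to run a tool. The tool name `search_tracks` and its arguments are hypothetical examples, not part of any real Spotify MCP server.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def make_tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool name and arguments, for illustration only.
    print(make_tool_call("search_tracks", {"q": "jazz", "limit": 5}))
```

A client discovers the available names and their input schemas with a `tools/list` request first. After that, calling any tool on any MCP server follows this same shape.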

Why Combine LangChain + MCP?

Simple:

- LangChain gives you powerful orchestration (chains, agents, memory)

- MCP keeps all your tool integrations clean, reusable, and consistent

Together, you get:

- Faster app development

- Easy scaling

- Much less custom glue code

Real Example: Use a Chatbot to Control Spotify

Let's walk through what this looks like in practice. Say you want to make a chatbot that can control your Spotify account. Here's the high-level workflow:

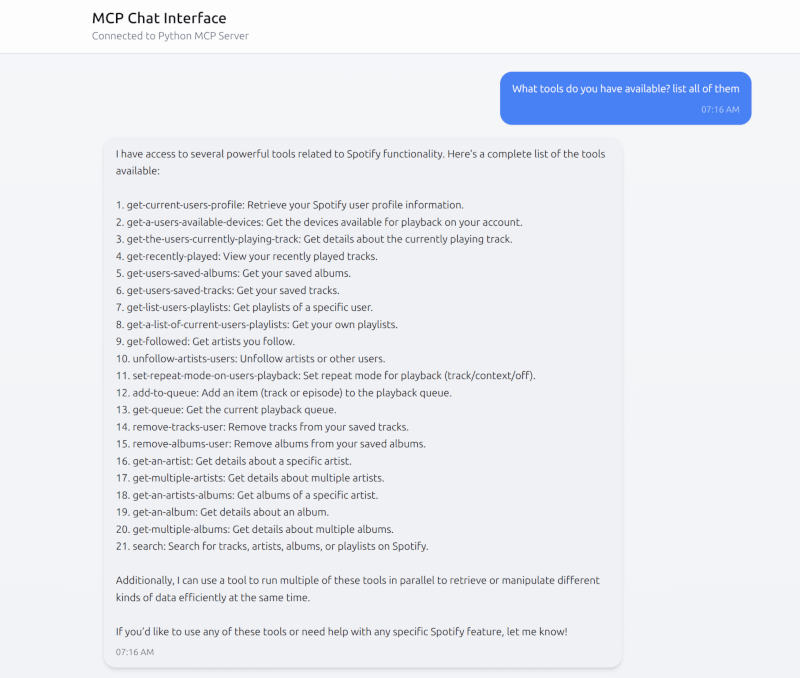

- Expose the Spotify API via MCP: Make a custom MCP tool that wraps the Spotify API (for things like searching for tracks, managing playlists, etc.).

- Connect via LangChain: Use a LangChain agent that can call your new Spotify-MCP tool whenever a user asks for music-related stuff.

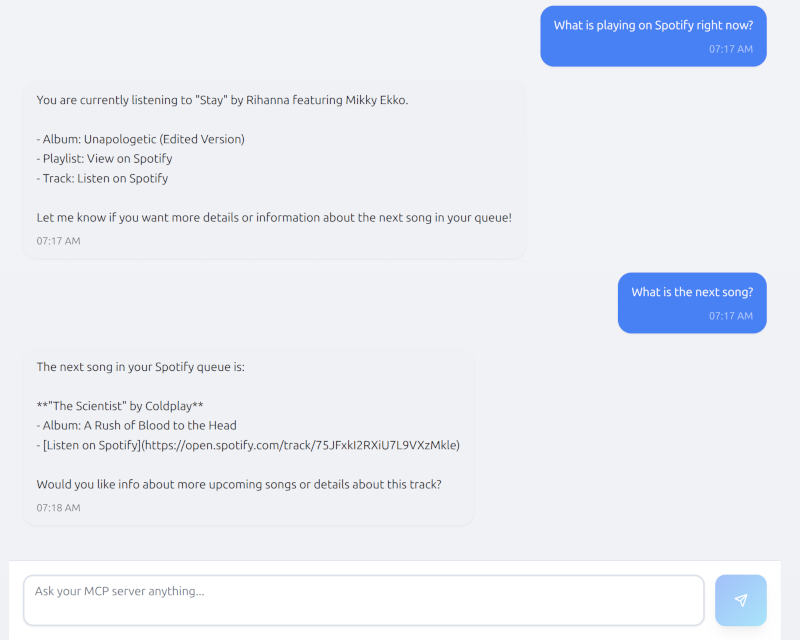

- Add a Chat Frontend: Build a simple chat UI (web or mobile) where users can send natural language requests like "Play my workout playlist" or "Find me some jazz music."

How it all works: The agent turns the user's request into a tool call, routes it through MCP to the Spotify API, and then sends the response back to the chat UI.
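A toy, LLM-free sketch of that round trip may make the flow easier to picture. Here a simple keyword check stands in for the agent's decision, and `search_tracks` is a hypothetical stub in place of a real Spotify tool exposed through MCP.

```python
from typing import Callable


def search_tracks(query: str) -> list[str]:
    """Hypothetical stub standing in for a Spotify search tool behind MCP."""
    catalog = {"jazz": ["So What", "Take Five"], "workout": ["Eye of the Tiger"]}
    return catalog.get(query, [])


TOOLS: dict[str, Callable[[str], list[str]]] = {"search_tracks": search_tracks}


def route(request: str) -> str:
    """Pick a tool (or none) for the request, call it, and format a chat reply."""
    text = request.lower()
    for genre in ("jazz", "workout"):
        if genre in text:
            # In the real app, the LLM chooses the tool and its arguments.
            tracks = TOOLS["search_tracks"](genre)
            return f"Found {len(tracks)} {genre} tracks: " + ", ".join(tracks)
    # No tool fits, so answer directly, as an agent would.
    return "I can search Spotify for music. Try asking for a genre."
```

For example, `route("Find me some jazz music")` returns `"Found 2 jazz tracks: So What, Take Five"`. In the real setup, the keyword check is replaced by the LLM's reasoning and the stub by an MCP call.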

What do you get?

- ✅ Chat with Spotify in plain English

- ✅ Integration is tidy, portable, and secure thanks to MCP

- ✅ A user-friendly, conversational experience

Step-by-step: Build Your MCP Server & Python Client

Step 1: Build the MCP Server with APICHAP's MCP-Builder.ai

1. Get the Spotify OpenAPI Spec: Download it from GitHub.

2. Upload to APICHAP mcp-builder.ai: After creating your account, upload your Spotify OpenAPI spec to convert it into an MCP server.

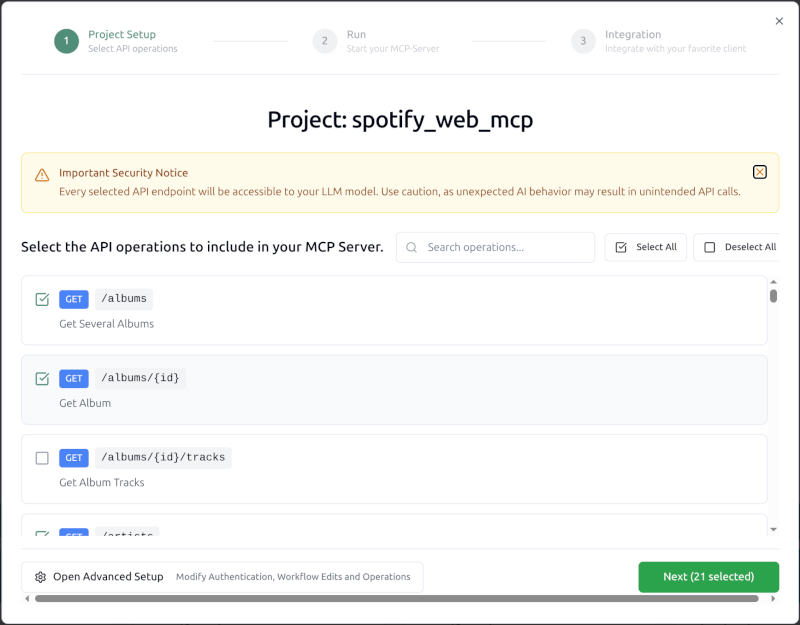

3. Pick the API Operations: Inside your new project, select the Spotify API actions you want (search, play, manage playlists, etc.).

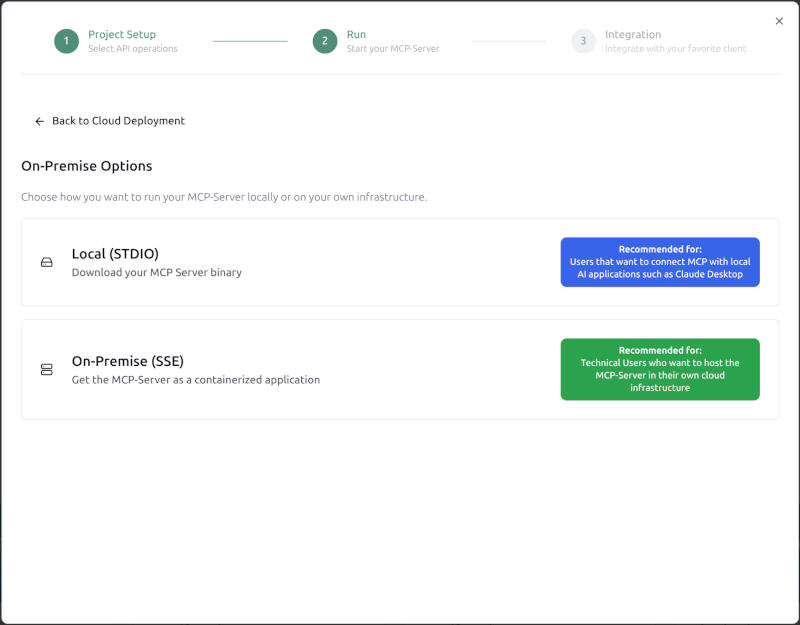

4. Download the SSE Runnable Option: This gives you a ready-to-run MCP server.

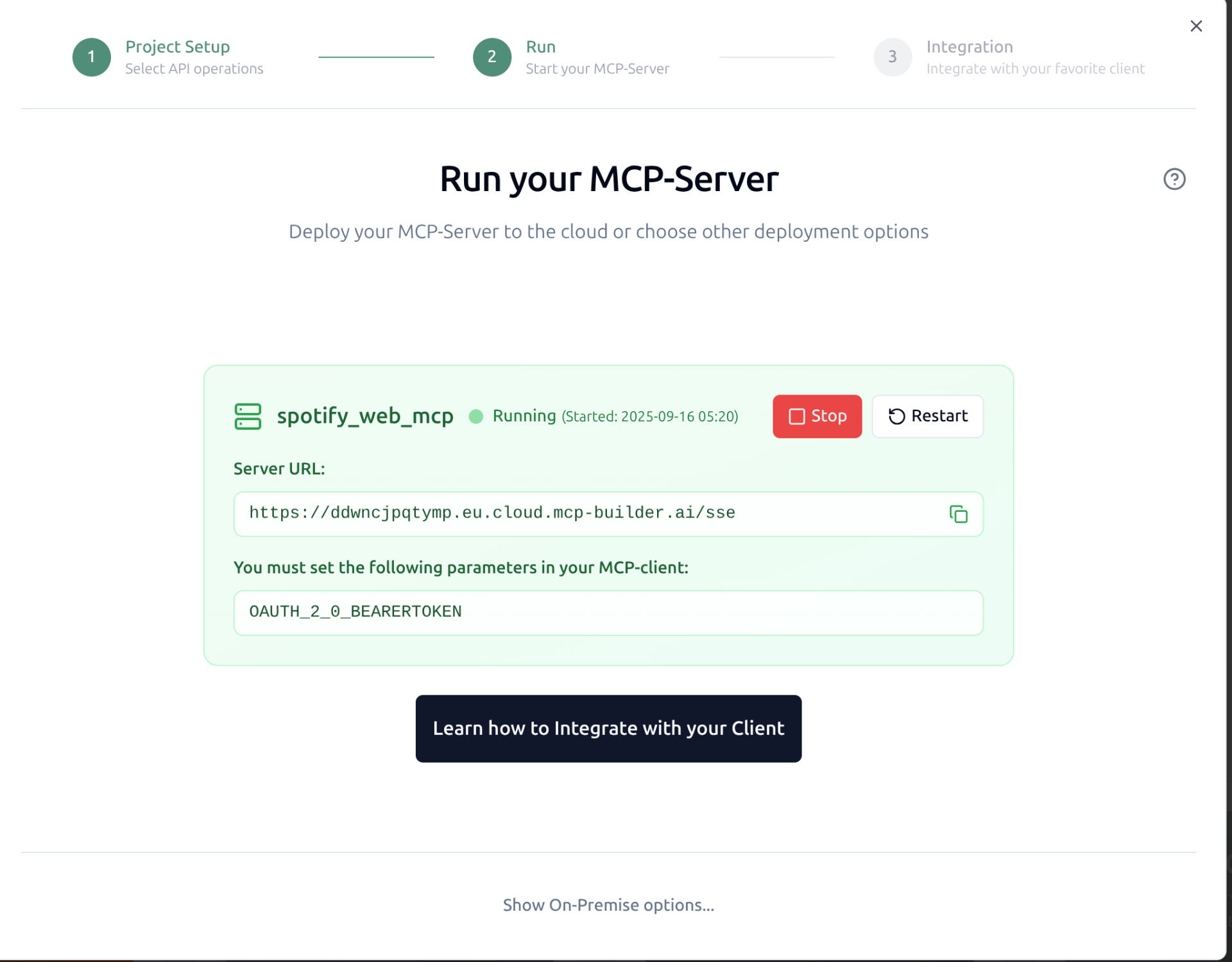

5. Run the MCP Server: Start your MCP server on the APICHAP cloud server.

🎉 That's it: you just built your first MCP server! HURRAY!

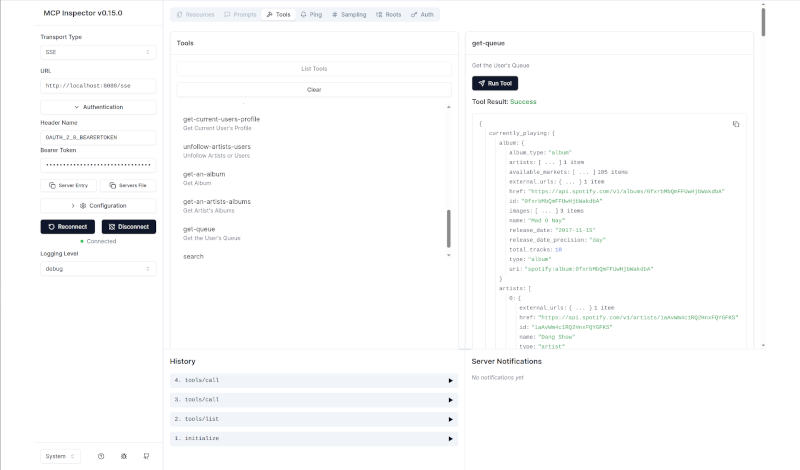

6. Test with MCP Inspector: Use your Spotify bearer token to authenticate. Try out your chosen endpoints directly and see the results returned by your new MCP server.
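If you'd like to see what the spec contains before picking operations in step 3, a short script can list them. This is a minimal sketch that assumes the spec is saved locally as JSON; the filename `spotify-openapi.json` is hypothetical, and a YAML spec would need a YAML parser first. It prints every operation by HTTP method, path, and `operationId`.

```python
import json
from pathlib import Path

# HTTP methods allowed as keys of an OpenAPI 3.x path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "options", "head", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation in an OpenAPI spec."""
    ops = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            # Path items can also hold keys such as "parameters"; skip those.
            if method in HTTP_METHODS:
                ops.append((method.upper(), path, operation.get("operationId", "")))
    return sorted(ops, key=lambda op: (op[1], op[0]))


if __name__ == "__main__":
    spec = json.loads(Path("spotify-openapi.json").read_text())  # hypothetical filename
    for method, path, op_id in list_operations(spec):
        print(f"{method:7} {path:40} {op_id}")
```

Each listed operation is a candidate MCP tool. Exposing only the ones the chatbot actually needs keeps the tool list short for the LLM.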

Step 2: Build an MCP Client and Chatbot Web Interface Using Python and LangChain

After completing the first step and making sure our MCP server is running smoothly, it's time to move on to the client side. This is where the fun happens: we'll build a simple web interface, set up the MCP client, and connect everything to an LLM.

Since version 0.3, LangChain has supported acting as an MCP client. That means we can use it not just for chains and agents, but also to connect our app to an LLM (such as OpenAI's GPT-4.1) and integrate it with our MCP server. In other words, LangChain takes care of the orchestration, while MCP gives us the standardized bridge to external tools and APIs.

To make this work, you'll wire up your Python client so it can talk to the MCP server. Once that connection is in place, your LLM can start making smart calls to your MCP-exposed tools right from the chat frontend.

We'll keep this example light, but you can find the full working code in our GitHub repo.

Connect to the MCP Server

The connection is handled by a class like MCPClientSSE, which uses Server-Sent Events (SSE) to maintain a session with the MCP server.

- Authentication uses your OAuth bearer token (read from `.env`).

- `MCPClientSSE` gives you two convenient methods:
  - `list_tools()`: see which tools are available from the MCP server
  - `call_tool(name, args)`: run a specific tool and pass it arguments

This setup code runs in your FastAPI "startup" event, so your MCP connection is ready before handling chatbot requests.
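The repo's own `MCPClientSSE` may differ, but to give a rough idea of what such a wrapper involves, here is a hypothetical sketch on top of the official MCP Python SDK (the `mcp` package). It assumes the server accepts the bearer token in an `Authorization` header. It is not the repo's implementation.

```python
from contextlib import AsyncExitStack
from typing import Any


class MCPClientSSE:
    """Hypothetical minimal SSE client wrapper around the official MCP Python SDK."""

    def __init__(self, url: str, token: str) -> None:
        self.url = url
        # Assumption: the MCP server expects a standard bearer-token header.
        self.headers = {"Authorization": f"Bearer {token}"}
        self.session = None
        self._stack = AsyncExitStack()  # keeps the SSE stream and session open

    async def aconnect(self) -> None:
        # Imported lazily so this module can be loaded without the SDK installed.
        from mcp import ClientSession
        from mcp.client.sse import sse_client

        read, write = await self._stack.enter_async_context(
            sse_client(self.url, headers=self.headers)
        )
        self.session = await self._stack.enter_async_context(ClientSession(read, write))
        await self.session.initialize()  # MCP handshake: exchange capabilities

    async def list_tools(self) -> list[Any]:
        result = await self.session.list_tools()
        return result.tools  # each tool has .name, .description and .inputSchema

    async def call_tool(self, name: str, args: dict) -> Any:
        return await self.session.call_tool(name, args)

    async def aclose(self) -> None:
        # Close the session and the SSE connection in reverse order of opening.
        await self._stack.aclose()
```

Using an `AsyncExitStack` lets `aconnect()` open the connection during startup and keep it alive across requests. Call `aclose()` on shutdown.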

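```python
# Runs inside the FastAPI startup handler. MCPClientSSE is defined in our GitHub repo,
# and token is the bearer token read from .env.
mcp_client = MCPClientSSE(SSE_URL, token)
await mcp_client.aconnect()  # open the SSE session before any chat request arrives
```

Build LangChain Tools from MCP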

Once the MCP client is connected, query the MCP server for its tools. Each tool comes with a name, a description, and a JSON Schema describing its inputs (and optionally its outputs). These schemas are turned into Pydantic models, so you get validation in Python, and then wrapped as LangChain tools:

```python
# build_langchain_tools_from_mcp is a helper from our repo
lc_tools = await build_langchain_tools_from_mcp(mcp_client)
```

Now every MCP tool is a LangChain tool you can use in your agent!
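The repo's helper does the schema-to-Pydantic conversion for you. To make the idea concrete without dependencies, here is a stdlib-only stand-in. It does not use Pydantic and is not the repo's code. It reads a tool's input JSON Schema and checks arguments against the required fields and basic top-level types. The example schema in the note after the code is hypothetical.

```python
from typing import Any

# Map JSON Schema primitive types to the Python types json.loads produces.
JSON_TYPES: dict[str, Any] = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def validate_args(schema: dict[str, Any], args: dict[str, Any]) -> dict[str, Any]:
    """Check args against a tool's JSON Schema (required fields and top-level types)."""
    props = schema.get("properties", {})
    missing = [name for name in schema.get("required", []) if name not in args]
    if missing:
        raise ValueError(f"missing required argument(s): {', '.join(missing)}")
    for name, value in args.items():
        if name not in props:
            # Strictness choice for this sketch: unknown keys are rejected.
            raise ValueError(f"unexpected argument: {name}")
        json_type = props[name].get("type")
        expected = JSON_TYPES.get(json_type, object)  # missing/unknown type: accept anything
        # bool is a subclass of int in Python, so True must not pass as a number.
        is_bool_as_number = isinstance(value, bool) and json_type in ("integer", "number")
        if is_bool_as_number or not isinstance(value, expected):
            raise ValueError(f"{name} must be of type {json_type}")
    return args
```

With a schema such as `{"properties": {"q": {"type": "string"}, "limit": {"type": "integer"}}, "required": ["q"]}`, a call like `validate_args(schema, {"q": "jazz", "limit": 5})` passes, while a missing `q` raises `ValueError`. In the article's pipeline, Pydantic plays this role, so bad arguments from the LLM are caught before a request reaches Spotify.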

Create the LangChain Agent

You're ready to connect it all up:

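```python
from langchain.chat_models import init_chat_model
from langgraph.prebuilt import create_react_agent

# openai_key holds your OpenAI API key; lc_tools comes from the previous step
agent = create_react_agent(
    model=init_chat_model("gpt-4.1", model_provider="openai", api_key=openai_key),
    tools=lc_tools,
)
```

Your agent uses GPT-4.1 via OpenAI's API and now has access to every Spotify tool you exposed via MCP. The agent decides when to call a tool or when to reply directly, based on the user's request.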

Finally, wrap this in a FastAPI service, and your chat frontend only needs to call your API endpoint.
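Here is a sketch of that last step. The `/chat` route, the `ChatRequest` model and the `build_agent` factory are hypothetical names, not taken from the repo. The handler only has to pass the user's message to the agent and return the last message in the result. FastAPI's `lifespan` hook, the current replacement for the `startup` event, is a natural place to connect MCP and build the agent once.

```python
import asyncio
from contextlib import asynccontextmanager
from types import SimpleNamespace
from typing import Any, Awaitable, Callable


async def handle_chat(agent: Any, message: str) -> str:
    """Send one user message to the ReAct agent and return its final answer."""
    result = await agent.ainvoke({"messages": [{"role": "user", "content": message}]})
    # The agent returns the whole conversation; the last message is its reply.
    return result["messages"][-1].content


def create_app(build_agent: Callable[[], Awaitable[Any]]):
    """Build the FastAPI app; build_agent should connect MCP and return the agent."""
    from fastapi import FastAPI  # deferred so handle_chat works without FastAPI installed
    from pydantic import BaseModel

    class ChatRequest(BaseModel):
        message: str

    state: dict[str, Any] = {}

    @asynccontextmanager
    async def lifespan(app: FastAPI):
        state["agent"] = await build_agent()  # runs once, before requests are served
        yield

    app = FastAPI(lifespan=lifespan)

    @app.post("/chat")
    async def chat(req: ChatRequest) -> dict:
        return {"reply": await handle_chat(state["agent"], req.message)}

    return app


if __name__ == "__main__":
    class EchoAgent:
        """Fake agent for a quick local check; no LLM or MCP involved."""

        async def ainvoke(self, payload: dict) -> dict:
            text = payload["messages"][-1]["content"]
            return {"messages": [SimpleNamespace(content=f"You said: {text}")]}

    print(asyncio.run(handle_chat(EchoAgent(), "Play my workout playlist")))
```

Keeping `handle_chat` separate from the FastAPI wiring means you can test the agent logic with a fake agent, as in the `__main__` block, without starting a server or calling OpenAI.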

Note: To get a Spotify API token, you'll need to go through their auth flow. Set your scopes based on what you want to let the chatbot do.
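Spotify uses OAuth 2.0. Below is a minimal sketch of the first leg of the authorization code flow: building the URL where the user grants your chosen scopes. The client ID and redirect URI are placeholders. The scopes are examples only, so adjust them to the tools you exposed.

```python
import secrets
from urllib.parse import quote, urlencode

AUTHORIZE_URL = "https://accounts.spotify.com/authorize"

# Example scopes: read private playlists and control playback. Grant only what you need.
SCOPES = [
    "playlist-read-private",
    "user-read-playback-state",
    "user-modify-playback-state",
]


def build_authorize_url(client_id: str, redirect_uri: str, scopes: list[str], state: str) -> str:
    """Return the Spotify consent URL for the authorization code flow."""
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),  # Spotify expects a space-separated scope list
        "state": state,  # random value to verify on the callback (CSRF protection)
    }
    # quote_via=quote encodes spaces as %20 rather than "+".
    return f"{AUTHORIZE_URL}?{urlencode(params, quote_via=quote)}"


if __name__ == "__main__":
    url = build_authorize_url(
        "YOUR_CLIENT_ID", "http://127.0.0.1:8000/callback", SCOPES, secrets.token_urlsafe(16)
    )
    print(url)
```

After the user approves, Spotify redirects to your redirect URI with a `code` parameter. Your backend then exchanges that code at `https://accounts.spotify.com/api/token` for the access token the MCP server needs.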

What's Awesome About This?

⚡ MCP Generation

You can build your MCP server with MCP-Builder.ai in seconds.

🔌 Standardization

Tools work across frameworks—reuse them anywhere.

🚀 Rapid Development

LangChain instantly gets access to anything you expose via MCP.

🔄 Flexibility

Add or swap tools without causing headaches.

🧹 Maintainability

Clean separation between "tool adapters" and "LLM logic."

🔒 Security

MCP is a great gatekeeper for sensitive data or privileged actions.

😊 Great UX

A chat frontend lets users interact in plain, natural language.

Wrap-up

If you're building a scalable AI assistant that needs to juggle different tools, LangChain + MCP is a killer combo. Adding a chat UI on top makes it all accessible and fun for users, too. For quick-and-dirty prototypes, you might not need this much structure—but if you plan to grow or connect to lots of APIs, you'll soon appreciate the flexibility and order.

With this Spotify + MCP + LangChain chatbot, you get a sneak peek into the future: one where LLMs can reason, execute, and orchestrate, all while plugging into new tools as easily as snapping in a USB stick.

Ready to Build Your Own MCP Server?

Start building AI agents connected to any API in minutes with MCP-Builder.ai

Did you try it? Want to see code samples or chatbot demos? Check out our GitHub repo or join our community!

About the Author

Negar is a passionate Machine Learning Engineer working with the software team at MCP-Builder.ai. Her journey into AI began during her studies, where she quickly became fascinated by the endless possibilities of machine learning and its potential to transform industries. Since then, she has dedicated herself to building intelligent systems, experimenting with new approaches, and sharing her insights with the broader tech community.