RAG vs MCP Explained: The Battle of Modern AI Architectures

Understanding the key differences between two trending AI approaches: RAG vs MCP

New acronyms seem to appear every week in the fast-moving field of artificial intelligence. RAG (Retrieval-Augmented Generation) and MCP (Model Context Protocol) are two that are currently trending. Both help AI agents and applications bring external data into a Large Language Model (LLM), but MCP goes one step further by providing functionality beyond data retrieval.

So who is winning the race between RAG and MCP?

What is RAG?

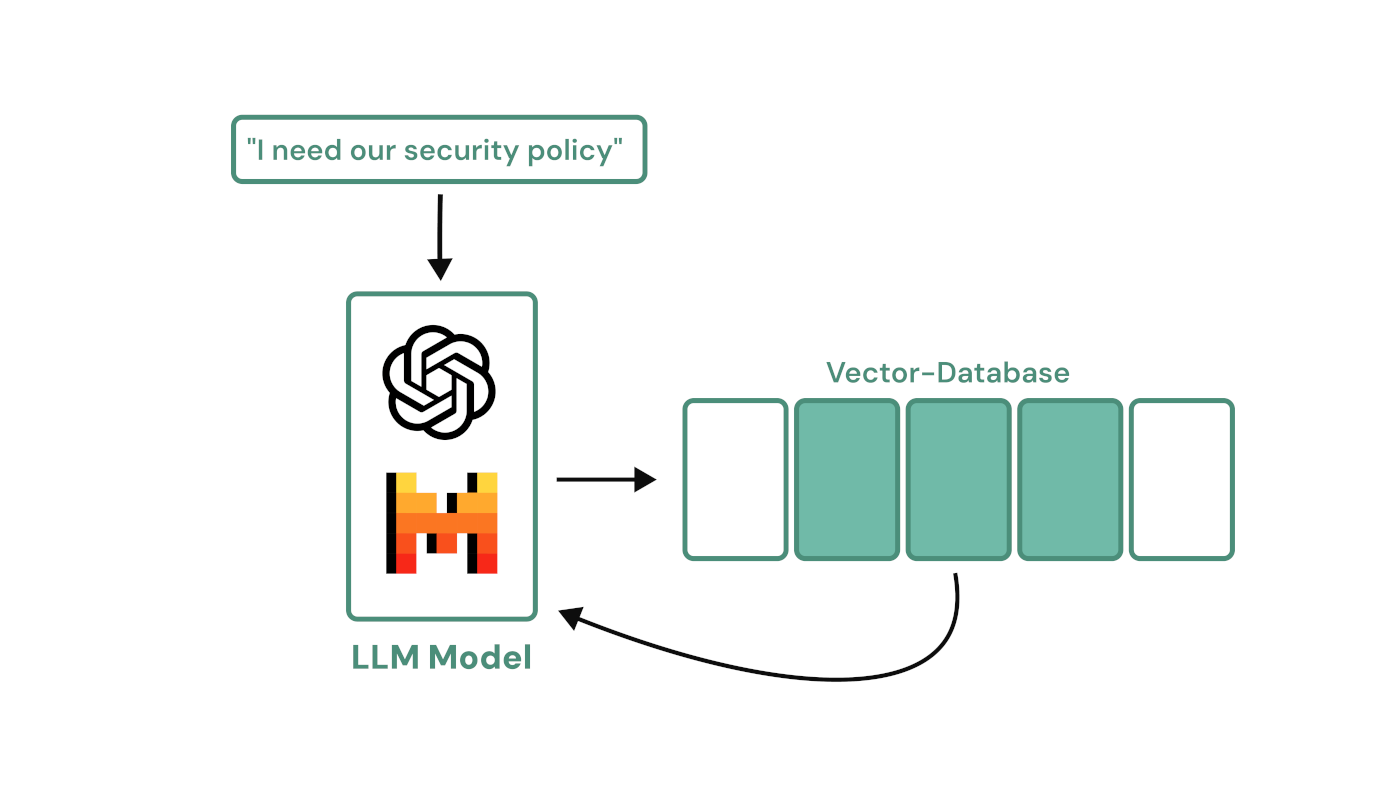

Retrieval-Augmented Generation, or RAG, is an AI architecture that combines knowledge retrieval with text generation by an LLM. Instead of depending only on what the model learned during training, RAG lets the system query a vector database for relevant data before the model generates a response.

In practice, RAG works like a smart semantic search engine combined with an LLM. It can search across all of your company's unstructured data sources, find the most useful passages, and put them into the model's context window every time a user asks a question. This makes the answers more accurate, up-to-date, and specific to the topic, and it also lowers the risk of hallucinations.

Note: Before unstructured data can be searched, the source files (such as PDFs and text documents) must be preprocessed and embedded into a vector database. This step is essentially what building your RAG means.

How RAG works:

Indexing / Embedding Phase:

Your documents, knowledge base, or other data are converted into vector embeddings and saved in a vector database. This enables semantic search, which allows the system to return results based on meaning rather than exact keywords.

Retrieval Phase:

When a user submits a query, the system scans the vector database to find the most semantically similar information. This robust search layer is one of RAG's most significant advantages over traditional keyword-based systems.

Generation Phase:

The collected data is transmitted to the Large Language Model (LLM) as part of its context window, allowing it to generate an accurate, current, and context-aware answer that incorporates both the retrieved facts and the model's own reasoning.
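The three phases above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `embed` function is a toy bag-of-words stand-in that only matches shared words (a real system would call an embedding model to capture meaning), the in-memory `index` list stands in for a vector database, and the sample documents and the names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Indexing phase: embed every document once and store the vectors.
documents = [
    "Employees receive 30 days of paid vacation per year.",
    "The office kitchen is cleaned every Friday afternoon.",
    "Expense reports must be submitted within 14 days.",
]
index = [(doc, embed(doc)) for doc in documents]

def retrieve(query: str, k: int = 2) -> list[str]:
    """Retrieval phase: return the k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(query: str, k: int = 2) -> str:
    """Generation phase (input side): place the retrieved text in the LLM's context."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How many vacation days do employees get?", k=1))
```

The resulting prompt string is what would be sent to the LLM. The model call itself is left out because it depends on the provider you use.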

Benefits of RAG

- Powerful semantic search via embeddings for highly relevant retrieval.

- Reduced hallucinations by grounding responses in factual data.

- Better domain adaptation for niche topics.

Limitations and Considerations

- Integration is not plug-and-play — you often need to manually connect your LLM to the retrieval system.

- Authentication & access control can be challenging — especially if your data is behind APIs or requires user-specific permissions.

- Keeping information up to date takes extra work: because the data has to be processed and indexed beforehand, a dedicated mechanism is needed to keep the index in sync with the sources.
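One possible way to keep an index current, sketched here as an assumption rather than a recommended design, is to store a content hash per document and re-embed only what changed. The function name `plan_reindex` and the sample file names are hypothetical.

```python
import hashlib

def content_hash(text: str) -> str:
    """Stable fingerprint of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_reindex(indexed_hashes: dict[str, str], current_docs: dict[str, str]) -> dict[str, list[str]]:
    """Compare stored hashes with the current sources and list what has to change."""
    # New or modified documents must be embedded again.
    to_embed = sorted(
        doc_id for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    )
    # Documents that disappeared from the source must be removed from the index.
    to_delete = sorted(doc_id for doc_id in indexed_hashes if doc_id not in current_docs)
    return {"embed": to_embed, "delete": to_delete}

# State of the index after the last run (hypothetical sample data).
indexed = {
    "policy.pdf": content_hash("Vacation: 28 days"),
    "old-faq.txt": content_hash("Outdated FAQ"),
}
# Current state of the source system.
current = {
    "policy.pdf": "Vacation: 30 days",  # changed since the last run
    "handbook.md": "Welcome aboard",    # newly added
}

print(plan_reindex(indexed, current))
# {'embed': ['handbook.md', 'policy.pdf'], 'delete': ['old-faq.txt']}
```

Running a job like this on a schedule keeps embedding costs proportional to what actually changed instead of re-processing the whole corpus.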

What is MCP (Model Context Protocol)?

The Model Context Protocol (MCP) is an open standard introduced by Anthropic in November 2024 that offers a common, structured interface for AI systems, particularly Large Language Models (LLMs), to securely connect to external data sources, services, and tools.

While some have referred to MCP as the "USB-C for AI" because of its standardized, plug-and-play design, the comparison is limited. MCP goes well beyond a simple connector, especially with Remote MCP Servers, which let AI agents access and orchestrate distributed tools and datasets across networks rather than only locally.

MCP enables LLMs to go beyond their training data: they can query live data from external sources and even trigger real-world actions.

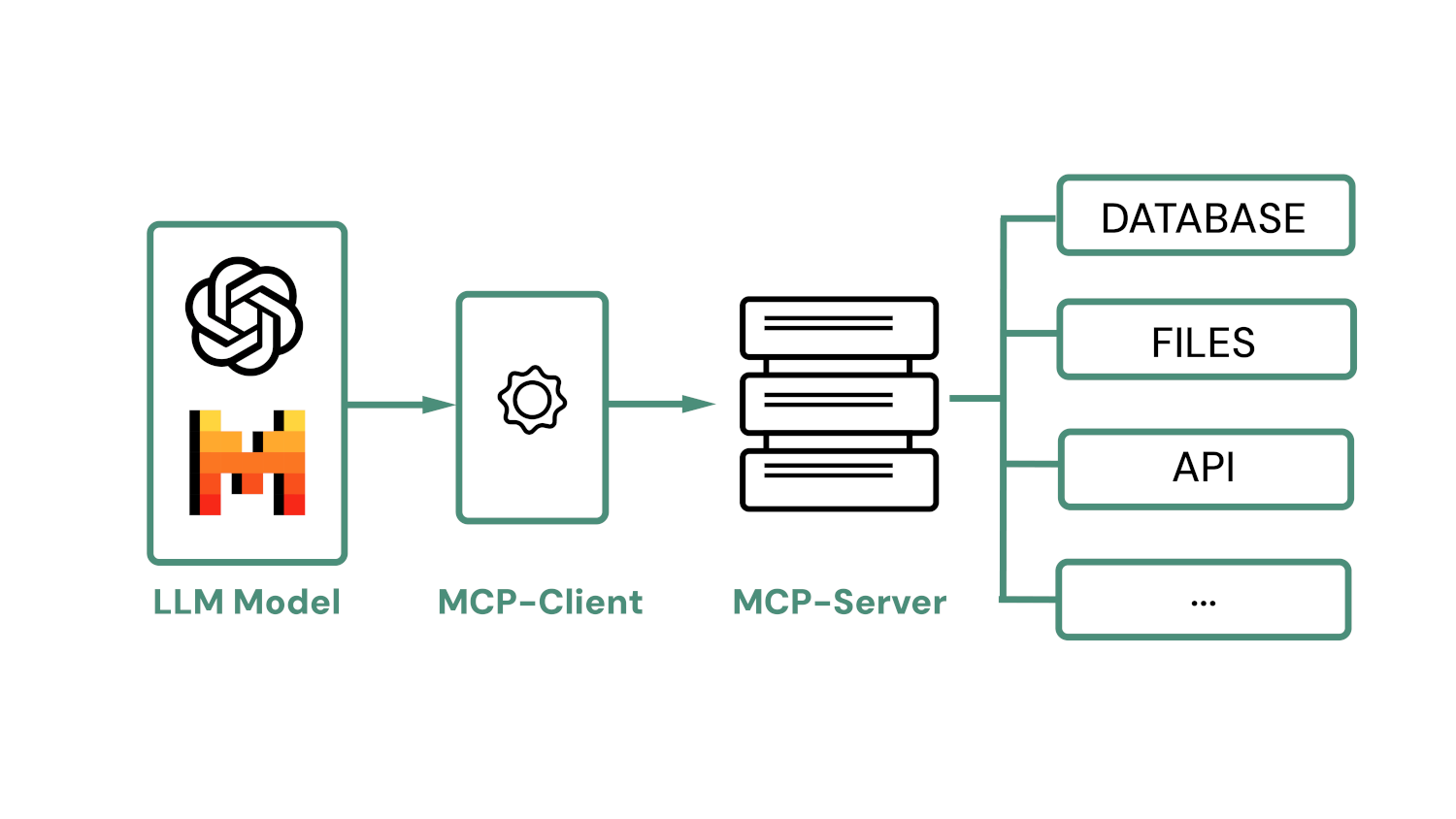

How MCP Works

MCP is based on a client-server architecture that aims to standardize how AI systems access and use external tools.

When a user gives a prompt, for example "Find sporting events in my area this weekend", the MCP-Client transmits the prompt to the Large Language Model (LLM) along with a list of accessible tools and resources.

The LLM then evaluates whether any of these tools are applicable to fulfilling the request. If a tool is required, the LLM does not directly contact the MCP server; rather, it directs the MCP-Client to run the tool on its behalf. This separation provides a consistent, secure, and predictable interface for AI models to communicate with local or remote capabilities. So MCP delegates decision-making power to the LLM: the LLM knows which tools are accessible and determines for itself what to call.

Important: The LLM never calls external systems itself (it is not a wild robot roaming the web). Instead, it tells the directly linked MCP-Client which data or actions it wants to trigger, and the MCP-Client then contacts the MCP-Server.

Benefits of MCP

- Open Standard for AI Connectivity – By following the MCP specification, any service can expose an MCP server and become accessible to MCP-compatible LLM applications. This creates a universal, plug-and-play interface for AI integration.

- Dynamic Tool Orchestration – MCP allows LLMs to discover, switch between and combine tools on demand—fetching live data, running actions and executing multi-step workflows without hard-coded logic.

Core Differences Between RAG vs MCP

1. Purpose and Functionality

RAG (Retrieval-Augmented Generation) excels at searching large volumes of data, making it a good fit for enterprises that wish to give their Large Language Models (LLMs) access to large-scale, preprocessed datasets.

In contrast, MCP (Model Context Protocol) focuses on listing and accessing resources in real time. It is particularly effective for accessing live data directly from source systems without preprocessing, and it can connect to almost any data source instantaneously.

2. Architecture and Components

RAG typically includes:

- A vector database for storing embeddings of raw data.

- A retriever to perform semantic search.

- A server endpoint that connects with the LLM.

- Embedding pipelines to prepare raw data for retrieval.

MCP follows a client–server model:

- The MCP-Client connects to the LLM.

- The MCP-Server exposes tools, resources, and structured context.

3. Scalability and Integration

RAG often requires additional setup and preprocessing when adding new data sources, since documents need to be embedded and indexed before they can be queried.

MCP provides a standardized interface that can be connected to any MCP-compatible LLM application with little setup, allowing rapid integration of new tools and live data streams without heavy upfront processing.

RAG vs MCP – Strengths and Weaknesses

Strengths of RAG

- Relevant information retrieval at query time, powered by semantic search that finds answers based on meaning rather than exact keywords.

- Works seamlessly with both structured and unstructured data, from databases to PDFs and Confluence pages.

Weaknesses of RAG

- Setup can be more complex, requiring data preprocessing, embedding creation and vector database configuration.

- Retrieval speed can slow down when queries involve large or complex datasets.

Strengths of MCP

- Offers action execution capabilities, enabling LLMs to trigger real-world operations (e.g., sending an email, updating a database) in addition to retrieving data.

- Highly reusable: a single MCP server can make a service accessible to many different LLMs, following an official, open standard.

Weaknesses of MCP

- No built-in semantic search for large datasets—relying on whatever search capabilities the connected tool provides.

- Does not inherently preprocess or structure unstructured data before presenting it to the LLM.

When to use RAG vs MCP

There is no set rule on when to employ RAG or MCP. It primarily depends on the context of your use case.

MCP is the way to go if you need to perform real-world actions in systems outside your LLM or retrieve specific data from a known source. RAG comes in handy when you have a lot of unstructured data and want to give the LLM the ability to search it semantically.

Conclusion: RAG vs MCP

RAG and MCP are not rivals; they are complementary tools designed for different purposes.

While MCP has the potential for wider adoption in the future, particularly as a standard for connecting LLMs to external systems, sharing data, and carrying out real-world actions, RAG will continue to excel in its niche of handling large, unstructured datasets and providing powerful semantic search functionality.

Another intriguing technique that some people are already investigating is combining RAG and MCP: a RAG system searches the unstructured data, while MCP connects that search to the LLM. This improves reusability and also enables more advanced setups, for example ones that enforce authentication.
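One way to picture this combination is a retrieval function exposed as a tool, with access control applied in the tool handler. The sketch below is a hypothetical illustration: the chunk data, the role names, and the word-overlap ranking (a stand-in for real embedding search) are assumptions, and the descriptor and result dictionaries only imitate the shape of MCP tool messages.

```python
import re

# Hypothetical pre-chunked knowledge base; each chunk lists the roles allowed to read it.
CHUNKS = [
    {"text": "Salary bands are reviewed every January.", "roles": {"hr"}},
    {"text": "The VPN requires two-factor authentication.", "roles": {"hr", "engineering"}},
    {"text": "Deployments are frozen during the last week of December.", "roles": {"engineering"}},
]

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search_knowledge_base(query: str, role: str, top_k: int = 1) -> list[str]:
    """RAG side: rank the chunks this role may see by word overlap with the query."""
    query_tokens = tokens(query)
    visible = [c for c in CHUNKS if role in c["roles"]]
    ranked = sorted(visible, key=lambda c: len(query_tokens & tokens(c["text"])), reverse=True)
    # Drop chunks that share no words with the query at all.
    return [c["text"] for c in ranked[:top_k] if query_tokens & tokens(c["text"])]

# MCP side: the descriptor a server could advertise for this tool.
SEARCH_TOOL = {
    "name": "search_knowledge_base",
    "description": "Search the internal knowledge base.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}

def call_tool(name: str, arguments: dict, caller_role: str) -> dict:
    """Tool handler: the role comes from the authenticated session, never from the LLM."""
    if name != SEARCH_TOOL["name"]:
        return {"content": [{"type": "text", "text": f"Unknown tool: {name}"}], "isError": True}
    hits = search_knowledge_base(arguments["query"], role=caller_role)
    return {"content": [{"type": "text", "text": hit} for hit in hits], "isError": False}

print(call_tool("search_knowledge_base", {"query": "salary bands"}, caller_role="hr"))
print(call_tool("search_knowledge_base", {"query": "salary bands"}, caller_role="engineering"))
```

The same query returns the salary chunk for the `hr` role and nothing for `engineering`, because the filter runs before ranking and the role is not part of the arguments the model controls.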

As the RAG vs MCP story evolves, we are in the early stages of these technologies. Standards will evolve, new protocols will emerge, and the industry will continue to experiment with the most effective ways to connect AI models to real-world data. One thing is certain: the race has only just started.

FAQs

1. What is the main difference between RAG and MCP?

RAG (Retrieval-Augmented Generation) focuses on retrieving useful information from massive structured and unstructured datasets, generally via semantic search, in order to improve an LLM's responses. MCP (Model Context Protocol) standardizes how LLMs interface with external tools and data sources, allowing for real-time data access and even initiating actions in other systems.

2. Can RAG and MCP be used together?

Yes. Some developers are experimenting with integrating RAG's robust semantic search capabilities with MCP's standardized connection. This enables AI models to extract useful information from enormous datasets (via RAG) and safely exchange or act on it in external systems (via MCP).

3. Does MCP replace RAG?

Probably not. MCP and RAG solve different problems. MCP is a standard that allows LLMs to query external sources and perform real-world actions, while RAG focuses on retrieving relevant information from a preprocessed semantic dataset to provide the user with more accurate answers.

4. Which is better for unstructured data—RAG or MCP?

It depends. RAG is often superior for unstructured data since it uses vector databases and embeddings for semantic search, allowing the LLM to locate meaning-based matches in documents, PDFs, and other text-heavy sources. MCP does not include built-in semantic search, but it can connect to other systems that provide that functionality.

5. Is MCP only for Anthropic's models?

No. MCP is an open standard originally started by Anthropic and now supported by a community of developers. It can be used to connect data sources or tools to different models, such as OpenAI's GPT, Google's Gemini, or Mistral, without having to rewrite integrations.

Start Building Your First MCP Server Today

Ready to implement MCP in your AI architecture? Create powerful, standardized connections between your LLMs and external systems in minutes.